“Half the money I spend on advertising is wasted; the trouble is, I don’t know which half.”

Most marketers know John Wanamaker’s words, and more than a century later, the problem still resonates.

Marketing teams have more tools and data at their disposal than ever before, but the challenge of measuring performance remains.

In recent years, measurement methods have changed rapidly. Privacy rules, platform restrictions, and fragmented customer journeys have all reshaped the landscape.

Yet, many teams continue to lean on familiar approaches like last-click attribution or default analytics models. These are simple to use, but they often give a narrow or incomplete picture of what’s working.

This blog will show how to move beyond those limits. You’ll see how to get a unified view of marketing performance, measure impact more accurately, and use those insights to shape stronger campaigns in 2026.


💡 Pro Tip

The future of marketing measurement isn’t about relying on fragmented, incomplete ad platform data. It’s about unifying performance insights to give every channel fair credit, understand how they work together to drive results, identify diminishing returns, and uncover the optimal budget allocation. Ruler Analytics makes this possible with its first-party multi-touch attribution and machine learning technology.


Most marketers start by looking at website traffic. Tools like Google Analytics make it straightforward to see which channels drive visits and how those visitors behave once they arrive.

Attribution models such as last click or data-driven attribution are often used to assign credit for conversions, helping teams understand which touchpoints influenced results.

Ad platforms also play a central role. They provide conversion tracking for individual campaigns and report on metrics like cost per acquisition or return on ad spend. This data is then used to refine targeting and guide future budget allocation.

For years, the core measure of success has been conversions, usually digital ones such as form fills, sign-ups, or purchases. Marketers have relied on attributing these conversions back to specific channels or campaigns to justify spend and plan strategy.

Marketing measurement is at a crossroads. Shifts in the data privacy landscape and changes in technology have cut into the visibility marketers once relied on.

Methods and tools that were considered industry standard just a few years ago are no longer reliable. To get accurate and defensible insight, teams need to move past the status quo and invest in more robust, long-term solutions.

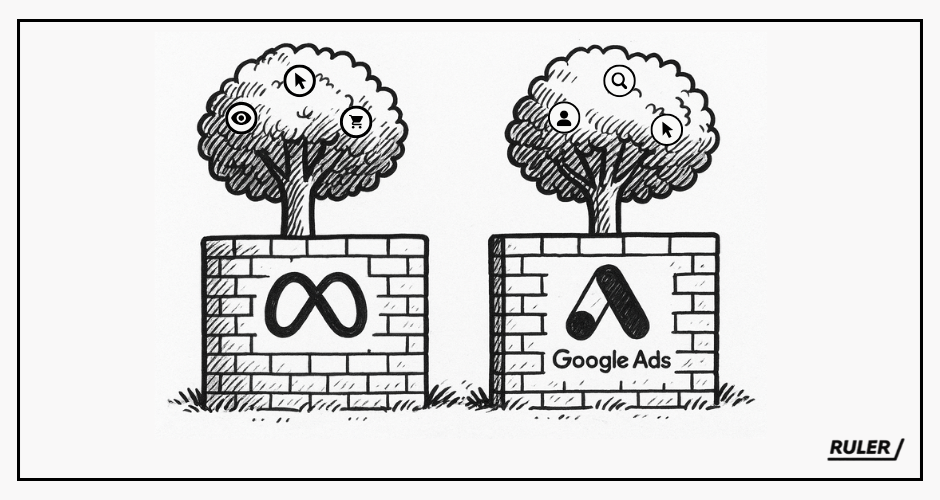

Many marketers default to the data provided by the platforms they advertise on. It’s easy to access, built-in, and often detailed enough to feel complete.

But it carries a fundamental flaw. Each platform only sees what happens within its own walls.

Facebook reports on Facebook touchpoints. Google reports on Google touchpoints. Neither captures the role of the other.

Every platform claims more credit than it deserves. Add up the conversions from each, and the total usually exceeds your actual results.

For example, Facebook might report 250 conversions and Google 280, a combined 530, while your CRM or sales data shows only 300. The difference is duplication: Facebook, Google, and even your email platform each claim the same sale.
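The arithmetic behind that gap is easy to script. The sketch below reuses the hypothetical figures from the example. The function name `overclaim` is illustrative only and is not part of any analytics tool.

```python
# Hypothetical figures from the example above: conversions each platform
# claims for itself versus sales actually recorded in the CRM.
platform_reported = {"Facebook": 250, "Google": 280}
crm_sales = 300


def overclaim(reported: dict[str, int], actual: int) -> tuple[int, float]:
    """Return (excess conversions claimed, total claimed / actual sales)."""
    total_claimed = sum(reported.values())
    return total_claimed - actual, total_claimed / actual


excess, ratio = overclaim(platform_reported, crm_sales)
print(f"Platforms claim {sum(platform_reported.values())} conversions, "
      f"{excess} more than the CRM shows ({ratio:.2f}x actual sales).")
```

Here the platforms together claim 230 more conversions than actually happened, roughly 1.77 times the real sales figure.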

The problem is compounded by inconsistent attribution rules.

Meta offers 1- or 7-day click windows and a 1-day view-through window, defaulting to 7-day click and 1-day view. Google Ads offers click windows ranging from 1 to 90 days. TikTok allows 1-, 7-, 14-, or 28-day click-through attribution and variable view-through options.
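Window settings alone can change the numbers. The sketch below counts the same set of sales under different click-through windows. The click-to-sale lags are invented for illustration. It also treats a sale as inside the window when the lag is less than or equal to the window length, which simplifies how each platform actually applies its rules.

```python
# Hypothetical days between an ad click and the resulting sale (one entry per sale).
click_to_sale_days = [0, 1, 3, 6, 9, 12, 20, 27, 35, 60]

# Click-through window lengths in days, drawn from the settings listed above.
windows = {"1-day": 1, "7-day": 7, "28-day": 28, "90-day": 90}


def conversions_in_window(lags: list[int], window_days: int) -> int:
    """Count sales whose click fell within the attribution window."""
    return sum(1 for lag in lags if lag <= window_days)


for name, days in windows.items():
    count = conversions_in_window(click_to_sale_days, days)
    print(f"{name} click window: {count} of {len(click_to_sale_days)} sales credited")
```

The same ten sales produce 2, 4, 8, or 10 credited conversions depending only on the window, before any cross-platform duplication is considered.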

On top of that, platforms often define or calculate similarly named metrics in different ways. The result is a fragmented picture that makes true comparison across platforms almost impossible.

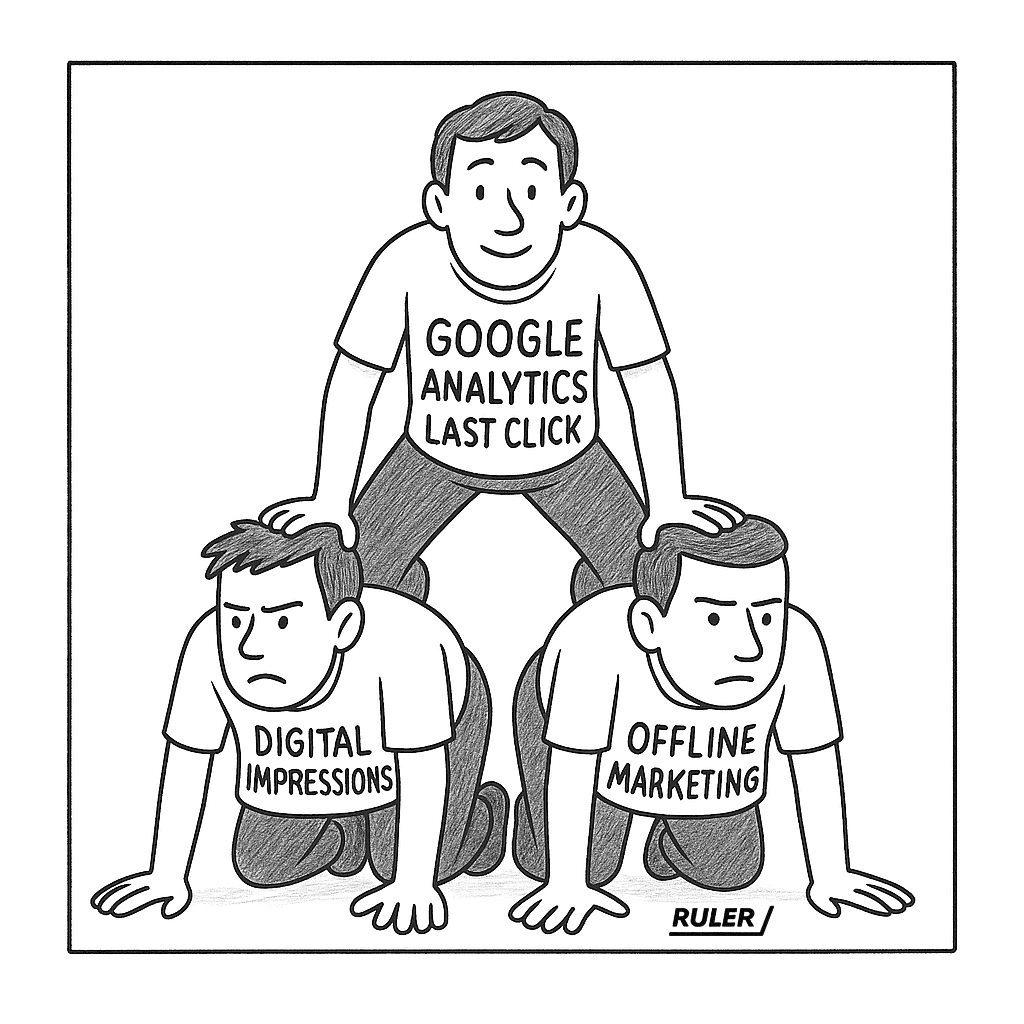

To try to reconcile these inconsistencies, many marketers turn to Google Analytics. In theory, GA4 provides a more neutral view, but in practice GA4 introduces its own restrictions.

It only offers two attribution models: last click and data-driven attribution. The latter is a black box, and other multi-touch models that once existed have been removed.

Attribution windows are capped at 90 days. For B2B businesses with long sales cycles, or ecommerce brands selling high-consideration products, that’s often too short.
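To check whether the 90-day cap matters for your business, measure how many closed deals began more than 90 days before they closed. This is a minimal sketch that assumes you can export first-touch and close dates from your CRM. The lags below are hypothetical.

```python
LOOKBACK_DAYS = 90  # the GA4 maximum described above

# Hypothetical days from first marketing touch to closed deal.
first_touch_to_close = [14, 45, 80, 95, 120, 150, 60, 200, 33, 110]


def share_outside_lookback(lags: list[int], lookback: int = LOOKBACK_DAYS) -> float:
    """Fraction of journeys whose first touch falls before the lookback window."""
    if not lags:
        return 0.0
    return sum(1 for lag in lags if lag > lookback) / len(lags)


print(f"{share_outside_lookback(first_touch_to_close):.0%} of journeys "
      f"started before the {LOOKBACK_DAYS}-day window")
```

In this made-up sample, half of the first touches fall outside the window, so GA4 could never credit them.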

GA also fails to account for impression-based activity. It tracks deterministic actions such as clicks while ignoring view-through interactions. This is a major gap: some studies suggest that for every click, there are 10 to 50 impressions shaping decisions in the background.

This creates a vicious cycle. GA doesn’t measure impressions, so marketers return to platform reporting for those signals. But platform data is duplicated, inconsistent, and subject to short attribution windows.

In the end, many organisations overweight the value of lower-funnel channels where evidence is clearer, and underinvest in awareness-building activity at the top of the funnel. The long-term impact is a weaker brand, limited reach, and constrained future growth.

Data privacy laws have further complicated measurement. Apple’s iOS 14.5 update in 2021 marked a turning point.

With more than 80% of users opting out of tracking through the “Ask App Not to Track” feature, platforms such as Meta lost much of their access to Apple’s advertising identifier (IDFA). With it went much of their ability to track users across apps and websites and to measure view-through conversions.

That gap continues to widen as user consent becomes a requirement in more markets.

Consent itself introduces further discrepancies. If a user clicks through from a Google Ad but declines analytical cookies, Google Analytics won’t capture their activity.

If the same user accepts advertising cookies, Google Ads may still record the interaction. That divergence makes cross-platform reconciliation even more difficult.

These changes affect performance as well as measurement. With fewer data signals available, ad algorithms can’t target audiences as effectively, reducing efficiency and inflating acquisition costs.

And the changes aren’t slowing down. Following Safari and Firefox, Google Chrome has been clamping down on privacy, making it harder to track user behaviour online.

Solving today’s measurement challenges requires more than tweaking settings in Google Analytics or comparing platform reports.

To achieve unified marketing measurement, marketers need a flexible, data-driven approach that integrates multiple methodologies.

Platforms are siloed, attribution settings differ, and Google’s black-box models leave out crucial signals like impressions. Relying on these methods alone leads to distorted budget decisions and a skewed view of performance.

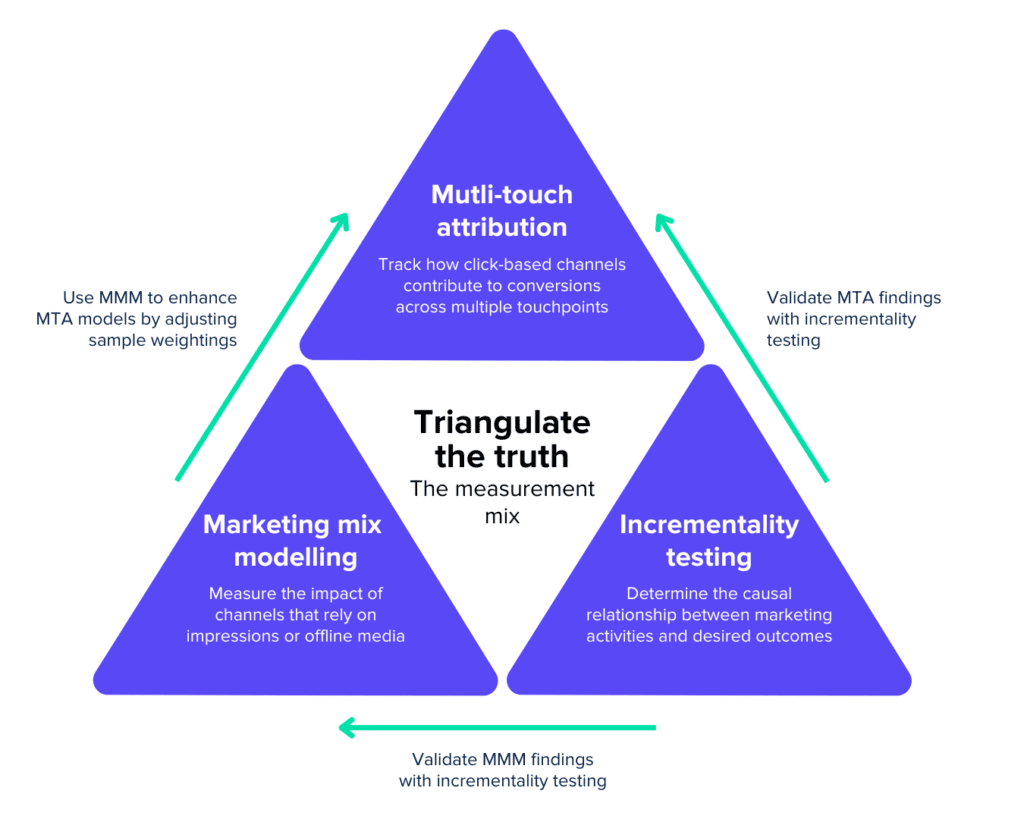

A unified approach combines three key elements: first-party multi-touch attribution, marketing mix modelling (MMM), and incrementality testing.

Together, these create a fuller picture of what is and isn’t working, bridging the gaps that each method has in isolation.

To recap, marketers have long relied on Google Analytics to track website traffic and on-site events.

While useful, it provides an aggregated view that misses critical context. It doesn’t integrate cleanly with CRM systems or external platform data, leaving a fragmented picture of performance.

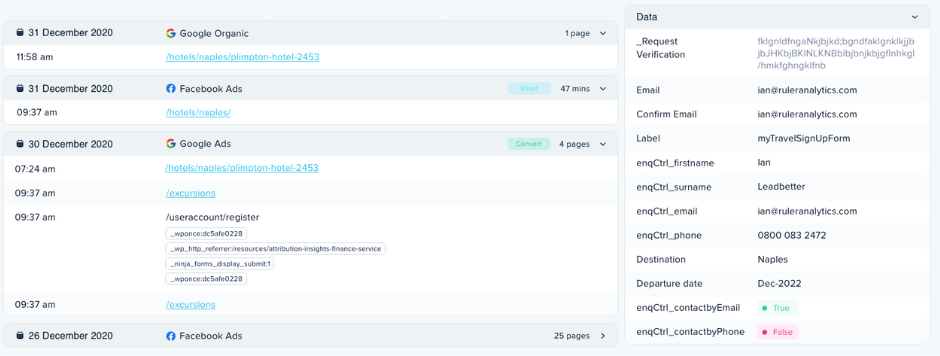

First-party multi-touch attribution closes this gap. By integrating tracking tags, CRM records, and advertising platform data, organisations can build a holistic view of customer behaviour.

Every touchpoint (page views, traffic sources, UTM parameters, and conversion events) can be captured, cleaned, and matched to identifiers like email addresses or phone numbers.

This produces a unified record of the journey that links marketing activity directly to business outcomes.
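Here is a simplified sketch of that matching step. Tracked touchpoints are grouped by an anonymous visitor ID. When the visitor submits a form, that ID is linked to an email address. The journey is then joined to CRM records by normalised email. All field names and records here are hypothetical. A production system would also need to handle phone numbers, multiple devices, and consent.

```python
from collections import defaultdict

# Hypothetical tracked touchpoints: (visitor_id, ISO date, source / medium).
touchpoints = [
    ("v1", "2025-01-09", "facebook / paid_social"),
    ("v1", "2025-01-02", "google / cpc"),
    ("v2", "2025-01-05", "newsletter / email"),
    ("v1", "2025-01-15", "direct / none"),
]
# Hypothetical form fills linking an anonymous visitor to an email address.
form_fills = {"v1": "  Jane.Doe@Example.com ", "v2": "sam@example.org"}
# Hypothetical CRM records keyed by email.
crm = {
    "jane.doe@example.com": {"stage": "Closed won", "revenue": 12_000},
    "sam@example.org": {"stage": "Lead", "revenue": 0},
}


def normalise_email(email: str) -> str:
    return email.strip().lower()


def build_journeys(touchpoints, form_fills, crm):
    """Return {email: {"touchpoints": [...], **crm_record}} for matched visitors."""
    by_visitor = defaultdict(list)
    # ISO dates sort correctly as strings, so this orders each journey in time.
    for visitor_id, _date, source in sorted(touchpoints, key=lambda t: t[1]):
        by_visitor[visitor_id].append(source)

    journeys = {}
    for visitor_id, email in form_fills.items():
        key = normalise_email(email)
        if key in crm:  # only keep visitors we can match to a CRM record
            journeys[key] = {"touchpoints": by_visitor.get(visitor_id, []), **crm[key]}
    return journeys


for email, journey in build_journeys(touchpoints, form_fills, crm).items():
    print(email, journey["stage"], " > ".join(journey["touchpoints"]))
```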

When a prospect becomes a lead, their marketing interactions are passed into the CRM, ecommerce platform, or internal tools. This allows businesses to see not just where leads come from, but how those leads progress through the funnel.

Patterns quickly emerge. For example, Meta ads might generate high lead volume but low close rates, while Google search campaigns could produce fewer leads that are far more likely to convert.

With this closed-loop visibility, marketers move beyond surface metrics like cost per lead and start optimising for revenue impact.
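The sketch below shows the kind of comparison closed-loop data makes possible. It sets the surface metric, cost per lead, against revenue-based ones. The channel figures are hypothetical and simply echo the Meta and Google pattern described above.

```python
from dataclasses import dataclass


@dataclass
class ChannelResult:
    spend: float
    leads: int
    customers: int
    revenue: float


def metrics(c: ChannelResult) -> dict[str, float]:
    """Surface metric (cost per lead) alongside revenue-based metrics."""
    return {
        "cost_per_lead": c.spend / c.leads,
        "close_rate": c.customers / c.leads,
        "cost_per_customer": c.spend / c.customers,
        "roas": c.revenue / c.spend,
    }


# Hypothetical closed-loop data.
channels = {
    "Meta ads": ChannelResult(spend=10_000, leads=400, customers=8, revenue=16_000),
    "Google search": ChannelResult(spend=10_000, leads=150, customers=15, revenue=45_000),
}

for name, result in channels.items():
    m = metrics(result)
    print(f"{name}: CPL ${m['cost_per_lead']:.2f}, close rate {m['close_rate']:.1%}, "
          f"cost per customer ${m['cost_per_customer']:,.0f}, ROAS {m['roas']:.1f}x")
```

Meta looks cheaper per lead ($25.00 against about $66.67). Google search, however, wins on cost per customer (about $667 against $1,250) and on return on ad spend (4.5x against 1.6x).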

In attribution tools such as Ruler, models can be adjusted depending on the objective. A first-click model might better suit upper-funnel campaigns, while comparing models side by side can highlight which channels exert the greatest influence across the journey.
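To show how model choice shifts credit, here is a sketch of three textbook rule-based models applied to the same hypothetical journeys. These are generic definitions, not Ruler’s implementation.

```python
from collections import Counter


def first_click(path):
    return {path[0]: 1.0}


def last_click(path):
    return {path[-1]: 1.0}


def linear(path):
    credit = Counter()
    for channel in path:
        credit[channel] += 1.0 / len(path)
    return credit


MODELS = {"first click": first_click, "last click": last_click, "linear": linear}

# Hypothetical converting journeys: (ordered touchpoints, revenue).
journeys = [
    (["Facebook", "Google search", "Email"], 900),
    (["Facebook", "Google search"], 600),
    (["Google search"], 300),
]


def attribute(journeys, model):
    """Distribute each journey's revenue across channels using the given model."""
    totals = Counter()
    for path, revenue in journeys:
        for channel, weight in model(path).items():
            totals[channel] += weight * revenue
    return totals


for name, model in MODELS.items():
    credit = attribute(journeys, model)
    print(name, {ch: round(value) for ch, value in credit.most_common()})
```

First click gives Facebook $1,500, last click gives it nothing, and linear lands in between at $600. Under every model, the credited totals add up to the $1,800 actually earned, so no sale is counted twice.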

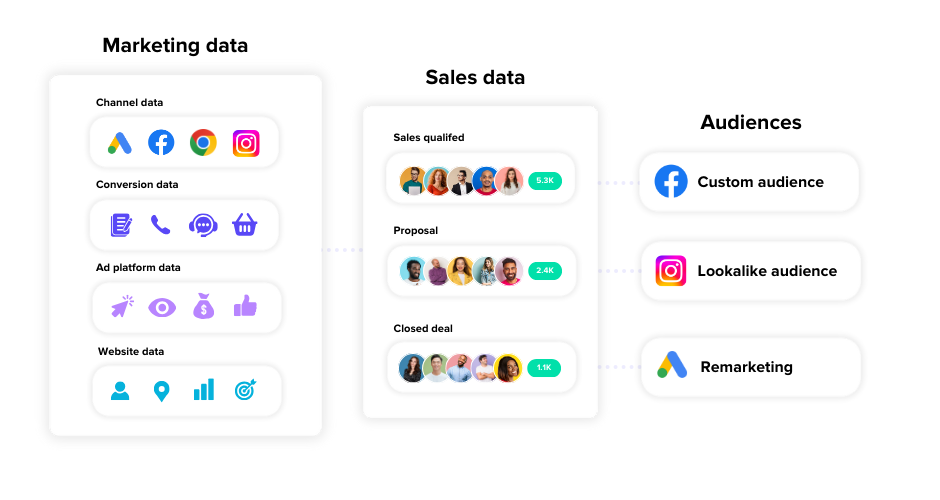

The benefits extend further when this data is synced back into ad platforms. Instead of generic retargeting, audiences can be built around CRM stages or repeat purchase behaviour.

It also reduces the problem of duplication, ensuring that channels don’t overclaim credit for the same sale.

First-party multi-touch attribution does more than support compliance in a world of stricter privacy laws. It equips marketers with the granular insight they need to connect marketing spend with actual revenue and make more confident budget decisions.

It transforms fragmented data into a single source of truth, empowering teams to optimise campaigns based on real business impact rather than surface-level metrics.

First-party multi-touch attribution gives marketers a detailed view of customer journeys, but it can’t account for everything.

Impressions that go unclicked, offline activity such as TV or radio, and external influences like competitor moves or seasonality remain outside its reach.

Marketing mix modelling (MMM) takes a probabilistic approach, analysing aggregated data over time to estimate how different marketing activities contribute to revenue.

Instead of tracking individual users, it applies statistical techniques, most commonly multivariate regression, to measure the relationship between inputs (media spend, promotions, external factors) and outputs (sales, revenue, leads).
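Below is a minimal sketch of the regression at the heart of an MMM. It makes two assumptions. First, TV spend carries over between weeks through geometric adstock with a fixed decay rate that is assumed rather than estimated. Second, the sales data is synthetic and noiseless, built from known effects. The least-squares solver uses only the standard library, so the example runs without extra packages.

```python
def adstock(spend: list[float], decay: float) -> list[float]:
    """Geometric adstock: each period keeps `decay` of the previous period's effect."""
    carried, out = 0.0, []
    for s in spend:
        carried = s + decay * carried
        out.append(carried)
    return out


def ols(X: list[list[float]], y: list[float]) -> list[float]:
    """Least squares via the normal equations (X'X) b = X'y, solved by Gauss-Jordan elimination."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))  # partial pivoting
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Hypothetical weekly inputs.
tv = [100, 0, 0, 200, 50, 0, 150, 0, 0, 300, 0, 100]
search = [50, 60, 40, 80, 70, 30, 90, 55, 65, 100, 45, 75]
holiday = [0] * 9 + [1] * 3  # external factor: seasonal flag

# Synthetic sales built from known effects so we can check the fit recovers them.
TV_DECAY = 0.5  # assumed, not estimated
tv_ad = adstock(tv, TV_DECAY)
sales = [500 + 0.8 * t + 1.5 * s + 200 * h for t, s, h in zip(tv_ad, search, holiday)]

X = [[1.0, t, s, h] for t, s, h in zip(tv_ad, search, holiday)]
baseline, tv_coef, search_coef, holiday_coef = ols(X, sales)
print(f"baseline {baseline:.1f}, TV {tv_coef:.2f}, search {search_coef:.2f}, holiday {holiday_coef:.1f}")
```

Because the synthetic data has no noise, the fit recovers the effects used to build it. Real data adds noise, correlated channels, and saturation. That is why production MMMs typically estimate decay rates as well and add regularisation or Bayesian priors.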

The advantage of MMM is its scope. It evaluates both digital and traditional channels on equal terms and can include 30 or more variables simultaneously.

These might cover brand spend, remarketing, awareness campaigns, price changes, seasonality, competitor activity, or even external conditions such as weather.

To avoid overstating effects, campaigns can be grouped by objective, isolating performance for awareness, conversion, or retention.

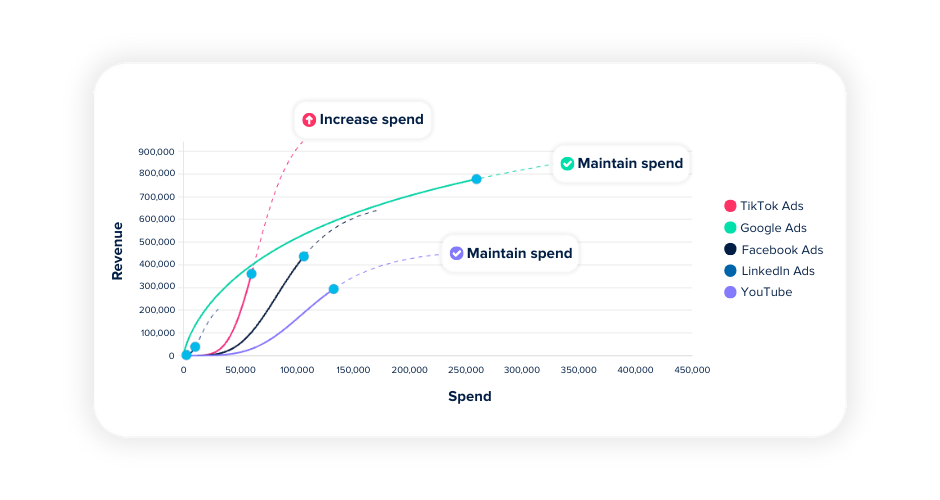

MMM also offers a unique ability to forecast. By running scenario simulations, marketers can see the impact of reallocating budget between channels or increasing investment in a given campaign type.

Diminishing returns curves help identify when additional spend stops delivering proportional growth, making it easier to judge available headroom and avoid waste.
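Diminishing returns and scenario planning can be sketched with a saturation curve. This example assumes a Hill-type response curve, a common choice in MMM, with hypothetical parameters for two channels. It compares marginal returns at current spend and tests a rebalanced budget of the same total size.

```python
def hill_response(spend: float, max_effect: float, half_sat: float, shape: float = 1.0) -> float:
    """Hill saturation curve: sales approach max_effect as spend grows;
    half of max_effect is reached when spend equals half_sat."""
    if spend <= 0:
        return 0.0
    return max_effect * spend**shape / (spend**shape + half_sat**shape)


# Hypothetical fitted curves (weekly sales as a function of weekly spend).
CURVES = {
    "search": {"max_effect": 1000, "half_sat": 5000},
    "social": {"max_effect": 800, "half_sat": 20000},
}


def marginal_return(channel: str, spend: float, step: float = 100.0) -> float:
    """Approximate extra sales per extra dollar at the current spend level."""
    p = CURVES[channel]
    return (hill_response(spend + step, **p) - hill_response(spend, **p)) / step


def total_sales(allocation: dict[str, float]) -> float:
    return sum(hill_response(spend, **CURVES[ch]) for ch, spend in allocation.items())


current = {"search": 15_000, "social": 5_000}
scenario = {"search": 10_000, "social": 10_000}  # same $20k total, rebalanced

for ch, spend in current.items():
    print(f"{ch}: {marginal_return(ch, spend) * 1000:.1f} extra sales per extra $1,000")
print(f"current plan: {total_sales(current):.0f} sales; scenario: {total_sales(scenario):.0f} sales")
```

In this made-up case, search is well into diminishing returns while social still has headroom. The rebalanced scenario therefore predicts more sales (about 933 against 910) for the same budget.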

Because the models use aggregated data, they are less affected by privacy restrictions and tracking gaps. They also provide statistical rigour and visual outputs that are often easier to present to finance and leadership teams than user-level attribution reports.

Instead of estimates based on clicks alone, MMM offers evidence-based guidance for both past performance and future planning.

By unifying granular attribution with high-level modelling, marketers gain the best of both worlds.

Attribution explains the details of how campaigns influence journeys, while MMM accounts for the broader, harder-to-track drivers of performance and gives long-term direction on where to allocate spend.

Even with first-party attribution and marketing mix modelling, one question often remains: did the campaign itself drive the result, or would those sales have happened anyway?

Incrementality testing answers that question.

Also known as lift studies or randomised controlled trials, incrementality testing isolates the true causal impact of marketing. It works by splitting an audience into test and control groups.

The test group is exposed to the campaign, while the control group is held back. By comparing the difference in outcomes between the two groups, marketers can see the incremental lift created by the campaign.
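Here is a minimal sketch of that comparison. It assumes a simple randomised split of users and a two-proportion z-test. Geo-based or platform-run lift studies use different designs and statistics. All figures are hypothetical.

```python
import math


def lift_test(test_conv: int, test_n: int, ctrl_conv: int, ctrl_n: int) -> dict[str, float]:
    """Compare conversion rates of exposed (test) and held-out (control) groups."""
    p_test = test_conv / test_n
    p_ctrl = ctrl_conv / ctrl_n
    abs_lift = p_test - p_ctrl
    # Two-proportion z-test using the pooled conversion rate.
    pooled = (test_conv + ctrl_conv) / (test_n + ctrl_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / test_n + 1 / ctrl_n))
    z = abs_lift / se
    return {
        "relative_lift": abs_lift / p_ctrl,
        "incremental_conversions": abs_lift * test_n,
        "z": z,
        "p_value": math.erfc(abs(z) / math.sqrt(2)),  # two-sided
    }


# Hypothetical result: 50,000 users in each group.
result = lift_test(test_conv=1_200, test_n=50_000, ctrl_conv=1_000, ctrl_n=50_000)
print(f"lift {result['relative_lift']:.0%}, "
      f"~{result['incremental_conversions']:.0f} incremental conversions, "
      f"p = {result['p_value']:.5f}")
```

A 2.4% conversion rate in the test group against 2.0% in the control group is a 20% relative lift. That works out to about 200 conversions that would not have happened without the campaign.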

This method is widely regarded as the gold standard for proving causality. Unlike attribution, which infers relationships between touchpoints and outcomes, incrementality shows direct evidence of cause and effect.

It helps confirm whether a campaign truly influenced results or if conversions would have occurred without it.

However, incrementality has its limits. Like MMM, it works best at an aggregate level rather than for every campaign or channel.

Running tests can also be resource-intensive, requiring carefully designed experiments and a large enough sample size to achieve statistical confidence.
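To gauge the required sample size before launching a test, a standard normal-approximation formula for comparing two proportions is enough. The sketch assumes a two-sided test at 5% significance and 80% power. The conversion rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, expected_rate: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each of the test and control groups for a two-sided
    two-proportion test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical: 2% baseline conversion rate.
print(sample_size_per_group(0.02, 0.024))  # detecting a lift to 2.4%
print(sample_size_per_group(0.02, 0.022))  # a smaller lift needs far more users
```

Detecting a lift from 2% to 2.4% needs roughly 21,000 users per group. Halving the expected lift roughly quadruples that number, which is why small effects are expensive to prove.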

Where incrementality adds value is in validating and strengthening other measurement methods.

For example, MMM might show that Meta campaigns generated 600 sales from $5,000 in spend and suggest there’s more headroom for growth.

Multi-touch attribution then zooms in, revealing how specific campaigns contributed to journeys, though it may miss some sales or undervalue impression-based influence.

Incrementality testing provides the final check: it confirms how many of those 600 sales were truly caused by Meta, and how many would have happened without it.
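Once a test has run, the final check is simple arithmetic. This sketch assumes the test produces an incrementality rate: the share of attributed sales that the lift study confirms were caused by the channel. The 600 sales and $5,000 spend come from the example above. The 60% rate is hypothetical and only illustrates how such a result would change cost per sale.

```python
def incremental_view(attributed_sales: float, spend: float,
                     incrementality_rate: float) -> dict[str, float]:
    """Adjust model-attributed sales by the share a lift test found to be causal."""
    incremental_sales = attributed_sales * incrementality_rate
    return {
        "reported_cost_per_sale": spend / attributed_sales,
        "incremental_sales": incremental_sales,
        "incremental_cost_per_sale": spend / incremental_sales,
    }


# MMM figures from the example above; the 60% incrementality rate is hypothetical.
view = incremental_view(attributed_sales=600, spend=5_000, incrementality_rate=0.6)
print(f"reported ${view['reported_cost_per_sale']:.2f} per sale, "
      f"{view['incremental_sales']:.0f} incremental sales at "
      f"${view['incremental_cost_per_sale']:.2f} each")
```

Suppose the test confirmed only 360 of the 600 sales. The true cost per incremental sale would then rise from about $8.33 to about $13.89, which changes how much headroom the MMM’s recommendation really offers.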

By working alongside attribution and mix modelling, incrementality brings confidence to decision-making.

Attribution provides tactical guidance at the campaign level. MMM supplies the strategic view across all channels and external factors. Incrementality ensures that both are grounded in causality.

Marketing performance can no longer be understood through a single lens. The days of relying solely on last-click attribution or platform-reported conversions are over. As privacy regulations tighten and tracking limitations grow, marketers must evolve beyond the fragmented, channel-specific data of the past.

The most effective strategies will blend first-party data, marketing mix modelling, and incrementality testing to deliver a unified, credible view of performance. Together, these methods help teams see not only what is working, but why, linking marketing investments directly to business outcomes.

By building a measurement framework that’s resilient to change, marketers can make smarter, evidence-based decisions, prove impact with confidence, and drive sustainable growth well into 2026 and beyond.